Autoregressive language models I have known and loved

Epistemic status: homework assignment. I wrote this in an hour or so (probably frantically, minutes before the deadline) and it is not up to my usual quality standards (prof had no critical feedback, but that’s a very low bar). It may or may not be useful to anyone, but there’s a reason this site is at unoriginal.blog. These are mainly published so I can cite them in other articles. Corrections are especially welcome here; send to [email protected].

Introduction

Large language models like ChatGPT and GPT-4 have led to a sea change in AI use, profoundly impacting society by enabling a wide range of applications:

- Software development: accelerated through tools such as GitHub Copilot

- Data science: extracting structured data from very unstructured sources

- Accessibility: applications such as Be My Eyes

- Customer support: banishing the phone tree to history

- Education: automated tutoring, test generation, etc.

But just as important as these is what the research behind these models has done for the field of artificial intelligence. Generations of science fiction readers—me included—grew up reading about computers that could converse and understand users through prose. Until just a year or two ago, these feats were squarely in the realm of fiction. I was blown away the first time I played with GPT-3, and a couple of years later with ChatGPT; the computer was talking to me! The innovation seemed to come out of nowhere. But that is not quite true: the ideas behind ChatGPT have a few years of history (which, in ML-time, is decades).

The GPT lineage

Several predecessor models first introduced the concepts that culminated in ChatGPT (and later GPT-4), the models that have become household names.

GPT-1

Introduced by OpenAI in June 2018 with the paper “Improving Language Understanding by Generative Pre-Training,” GPT-1 (Generative Pre-trained Transformer 1) marked the beginning of the GPT lineage. It combined two ideas flourishing in the machine learning community at the time:

- In 2017, Google researchers introduced the Transformer, a neural network architecture for sequences that proved more efficient than previous approaches to language modeling such as (in roughly decreasing order of recency and complexity) LSTMs (Long Short-Term Memory networks), RNNs (Recurrent Neural Networks), HMMs (Hidden Markov Models), and n-gram models. Because a Transformer processes every position of a sequence in parallel rather than one step at a time, it has proven much more scalable and easier to train than these alternatives.

- Pre-training, by comparison, was an older technique. Since the mid-2000s, machine learning researchers had found that even when a system was intended for one very specific task (often trained with supervised learning on a dataset that required more human labeling), it still paid off to start with an unsupervised training step. In that step the model learns from a larger pool of unstructured data; a fine-tuning phase then further trains this “base model” (as it later came to be called) on the specific task in question.
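To make the parallelism point concrete, here is a minimal sketch of single-head causal self-attention, the core operation of GPT-style Transformers, in plain Python. The function name, the tiny dimensions, and the use of Python lists instead of a tensor library are my own illustrative choices, not anything from OpenAI’s code. Each output position is computed from the inputs alone, never from a previous output, which is what lets real implementations batch every position into a few matrix multiplications; an RNN, by contrast, must finish step t−1 before starting step t.

```python
import math


def causal_self_attention(xs, wq, wk, wv):
    """Single-head causal self-attention (illustrative sketch).

    xs: list of T input vectors, each a list of d floats
    wq, wk, wv: d x d projection matrices given as lists of rows
    Returns a list of T output vectors.
    """
    def matvec(m, v):
        return [sum(a * b for a, b in zip(row, v)) for row in m]

    # Project every input into query, key, and value vectors.
    qs = [matvec(wq, x) for x in xs]
    ks = [matvec(wk, x) for x in xs]
    vs = [matvec(wv, x) for x in xs]
    d = len(qs[0])

    outputs = []
    for t, q in enumerate(qs):
        # Causal mask: position t only scores positions 0..t, never the future.
        scores = [sum(a * b for a, b in zip(q, ks[s])) / math.sqrt(d)
                  for s in range(t + 1)]
        # Numerically stable softmax over the allowed positions.
        top = max(scores)
        weights = [math.exp(sc - top) for sc in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Output is the attention-weighted average of the value vectors.
        outputs.append([sum(w * vs[s][i] for s, w in enumerate(weights))
                        for i in range(d)])
    return outputs


identity = [[1.0, 0.0], [0.0, 1.0]]
out = causal_self_attention([[1.0, 0.0], [0.0, 1.0]], identity, identity, identity)
```

With identity projections, the first position can only attend to itself, so its output equals its input, and changing later inputs never changes earlier outputs.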

GPT-1, as its name implies, used the Transformer architecture and was pre-trained on a large collection of books (the BooksCorpus dataset). (Text from books is often more valuable than web text for LLMs because books contain “long-range dependencies”: what’s on page 1 of a book has a bearing on page 10, which is not true of relatively short web content.) It had 117 million parameters, which, believe it or not, was a lot at the time. This foundational model showcased the potential of Transformers for language understanding and generation, achieving significant improvements over the existing state of the art on tasks such as judging grammaticality, question answering, and commonsense reasoning. (Though it was still very bad at these by modern AI or human standards!)
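“Generative pre-training” means training the model to predict the next token of ordinary text, so the text supplies its own labels and no human annotation is needed. The sketch below shows that objective (the average negative log-likelihood of each next token) using a toy bigram count model as a hypothetical stand-in for the Transformer; a real GPT conditions on all previous tokens, not just one, and is trained by gradient descent rather than by counting.

```python
import math
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count how often each token follows each other token (toy 'model')."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def next_token_prob(counts, prev, nxt):
    """P(next token | previous token), estimated from the counts."""
    total = sum(counts[prev].values())
    return counts[prev][nxt] / total if total else 0.0


def avg_next_token_nll(counts, tokens):
    """Average negative log-likelihood of each token given its predecessor.

    This is the quantity generative pre-training minimizes. Assumes every
    bigram in `tokens` has nonzero probability (true on the training text).
    """
    losses = [-math.log(next_token_prob(counts, p, n))
              for p, n in zip(tokens, tokens[1:])]
    return sum(losses) / len(losses)


text = "the cat sat on the mat".split()
model = train_bigram(text)
print(next_token_prob(model, "the", "cat"))  # 0.5: "the" is followed by "cat" or "mat"
print(avg_next_token_nll(model, text))
```

Only the two uncertain predictions after “the” contribute to the loss here; a better model of the text would drive that number lower.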

GPT-2

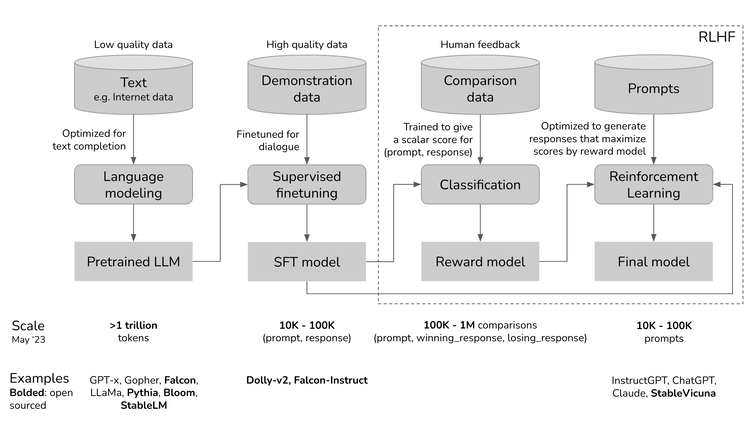

Building on the success of its predecessor, GPT-2 was released in February 2019 with the paper “Language Models are Unsupervised Multitask Learners.” With 1.5 billion parameters, GPT-2’s scaled-up design generated noticeably more coherent and contextually relevant text. It was pre-trained on an expanded 40GB dataset of web text, which enabled it to perform a variety of linguistic tasks without task-specific training. However, due to ethical concerns such as the potential for misuse, OpenAI initially withheld the most powerful version of the model from the public.

In my opinion, the most important advancement of the GPT-2 era was Reinforcement Learning from Human Feedback (RLHF), which OpenAI researchers applied to GPT-2 later in 2019. “Base” GPT-2, like GPT-1, has the sole objective of predicting the next words in a sequence. If you ask it a question, it may respond with more questions, because it judges your prompt most likely to appear in a list of questions. Obviously this is no good for chat and other fine-tuned applications. So OpenAI researchers such as Paul Christiano turned to reinforcement learning (RL), a dominant approach in other areas of AI, where a model is optimized to earn intermittent or delayed “reward” of some sort. RLHF uses human-generated feedback rather than a predefined reward function: human judgments about the desirability of different outputs are used to build or refine a reward model. The model is then fine-tuned with a reinforcement learning algorithm such as Proximal Policy Optimization (PPO), which nudges it toward outputs the reward model scores highly. The end result is that, under RLHF, base models learn to do what their designers reward them for in the fine-tuning step.
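The reward-model step can be sketched with the pairwise (Bradley-Terry style) loss used in later RLHF work such as the InstructGPT paper: a labeler picks the better of two responses, and the reward model is trained so that −log σ(r(chosen) − r(rejected)) is small. In this minimal sketch the reward model is a hypothetical linear function over two hand-made features (“answers the question”, “replies with another question”); real reward models are themselves large language models scoring raw text.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def reward(w, features):
    """Hypothetical linear reward model: r = w . features."""
    return sum(wi * fi for wi, fi in zip(w, features))


def pairwise_loss(w, chosen, rejected):
    """-log sigmoid(r(chosen) - r(rejected)); small when chosen scores higher."""
    return -math.log(sigmoid(reward(w, chosen) - reward(w, rejected)))


def train_reward_model(pairs, dim, lr=0.5, epochs=200):
    """Fit w by plain gradient descent on (chosen, rejected) feature pairs."""
    w = [0.0] * dim
    for _ in range(epochs):
        for chosen, rejected in pairs:
            margin = reward(w, chosen) - reward(w, rejected)
            # The loss gradient w.r.t. w is -(1 - sigmoid(margin)) * (chosen - rejected),
            # so a descent step adds a positive multiple of (chosen - rejected).
            step = lr * (1.0 - sigmoid(margin))
            w = [wi + step * (c - r) for wi, c, r in zip(w, chosen, rejected)]
    return w


# Features: [answers the question, replies with another question]
preferences = [
    ([1.0, 0.0], [0.0, 1.0]),  # a direct answer beat a counter-question
    ([1.0, 1.0], [0.0, 1.0]),  # an answer plus a question beat a question alone
]
w = train_reward_model(preferences, dim=2)
```

After training, the reward model scores answering above counter-questioning, which is exactly the signal the RL step then optimizes the language model against.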

GPT-3

GPT-3 was a landmark release by OpenAI in June 2020, as detailed in “Language Models are Few-Shot Learners.” Breaking records with 175 billion parameters, GPT-3 could perform tasks in a few-shot or even zero-shot manner: given only a handful of examples in the prompt, or none at all, and with no task-specific fine-tuning. The model could adapt to a myriad of tasks, from translation to creative writing, showcasing an unprecedented level of versatility and setting a new standard for language models.
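In few-shot mode the “training examples” live in the prompt itself and the model’s weights are never updated; zero-shot simply drops the examples. A minimal sketch of building such a prompt, in a format modeled on the English-to-French examples in the GPT-3 paper (the function name and exact layout are my own):

```python
def build_prompt(task, examples, query):
    """Few-shot prompt if `examples` is non-empty, zero-shot if it is empty.

    The model is expected to continue the text after the final "=>",
    because an answer is the most likely thing to come next.
    """
    lines = [task]
    lines += [f"{source} => {target}" for source, target in examples]
    lines.append(f"{query} =>")
    return "\n".join(lines)


few_shot = build_prompt(
    "Translate English to French:",
    [("sea otter", "loutre de mer"), ("peppermint", "menthe poivrée")],
    "cheese",
)
zero_shot = build_prompt("Translate English to French:", [], "cheese")
print(few_shot)
```

Nothing here is specific to translation: swapping the task line and example pairs is all it takes to “teach” a base model a new task this way.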

In the original paper, GPT-3 was mostly tested using carefully written prompts, so that even the base model could do creative writing, translation, etc. (by prompting in such a way that the answers are the most likely things to come next). However, soon OpenAI was combining the main innovation of GPT-3 (scale) with that of GPT-2 (RLHF, and moar scale).

GPT-3.5

After the release of GPT-3, OpenAI made several fine-tuned variants of the model designed to complete different tasks.

WebGPT

One of the first applications of RLHF, and the first applied to GPT-3(.5) as far as I’m aware, was WebGPT, introduced in “WebGPT: Browser-assisted question-answering with human feedback”. As early as 2020, OpenAI realized that GPT models, even those otherwise good at text completion, would “hallucinate” false information (as many of us have learned the hard way with ChatGPT). To improve the “factuality” of these models, OpenAI used supervised fine-tuning and RLHF to teach GPT-3 to use a text-based browser and cite its sources.

InstructGPT

InstructGPT, introduced in “Training language models to follow instructions with human feedback” (2022), further refined the RLHF techniques used in WebGPT and GPT-2. It was designed to follow instructions better than base GPT-3, aligning the model’s responses more closely with user intentions. RLHF came into its own with InstructGPT, resulting in an AI that was significantly better at understanding and carrying out specific user commands. Judging from examples in the paper, InstructGPT was almost as good at general question answering as ChatGPT would become.
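Two pieces of the RL step described in the InstructGPT paper can be sketched in a few lines: the reward actually optimized is the reward model’s score minus a penalty (weighted by a coefficient β) for drifting away from the supervised fine-tuned (SFT) model, and PPO (mentioned in the GPT-2 section) updates the policy using a clipped objective that limits how far a single update can move it. The β and ε defaults below are illustrative placeholders, not values taken from the paper, and the paper’s additional pre-training-data term is omitted.

```python
import math


def kl_penalized_reward(rm_score, logp_policy, logp_sft, beta=0.02):
    """Reward-model score minus beta * log(pi_policy(y|x) / pi_sft(y|x)).

    logp_policy and logp_sft are the summed log-probabilities of the same
    response under the model being trained and under the frozen SFT model.
    beta is an illustrative placeholder value.
    """
    return rm_score - beta * (logp_policy - logp_sft)


def ppo_clipped_objective(logp_new, logp_old, advantage, eps=0.2):
    """PPO clipped surrogate for a single sample (higher is better).

    The probability ratio is clipped to [1 - eps, 1 + eps] and the minimum of
    the clipped and unclipped terms is taken, giving a pessimistic objective
    that stops rewarding changes beyond that range.
    """
    ratio = math.exp(logp_new - logp_old)
    clipped = min(max(ratio, 1.0 - eps), 1.0 + eps)
    return min(ratio * advantage, clipped * advantage)
```

For example, if a good response (advantage 1) became 1.5× more likely, the objective credits only 1.2× with ε = 0.2, so one batch of feedback cannot push the model arbitrarily far.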

As WebGPT and InstructGPT were being developed, OpenAI also continued pre-training GPT-3, resulting in a noticeably smarter base model known as GPT-3.5. This model is often just referred to as GPT-3, though, as it’s a direct continuation of its training process.

ChatGPT

Building on the concept of RLHF, OpenAI released ChatGPT on November 30th, 2022. It improved on InstructGPT’s conversational abilities by fine-tuning on dialogue-based data, enhancing performance in multi-turn conversations. It essentially just scaled the training and techniques of the previous few models. I think ChatGPT itself needs no introduction at this point.

GPT-4

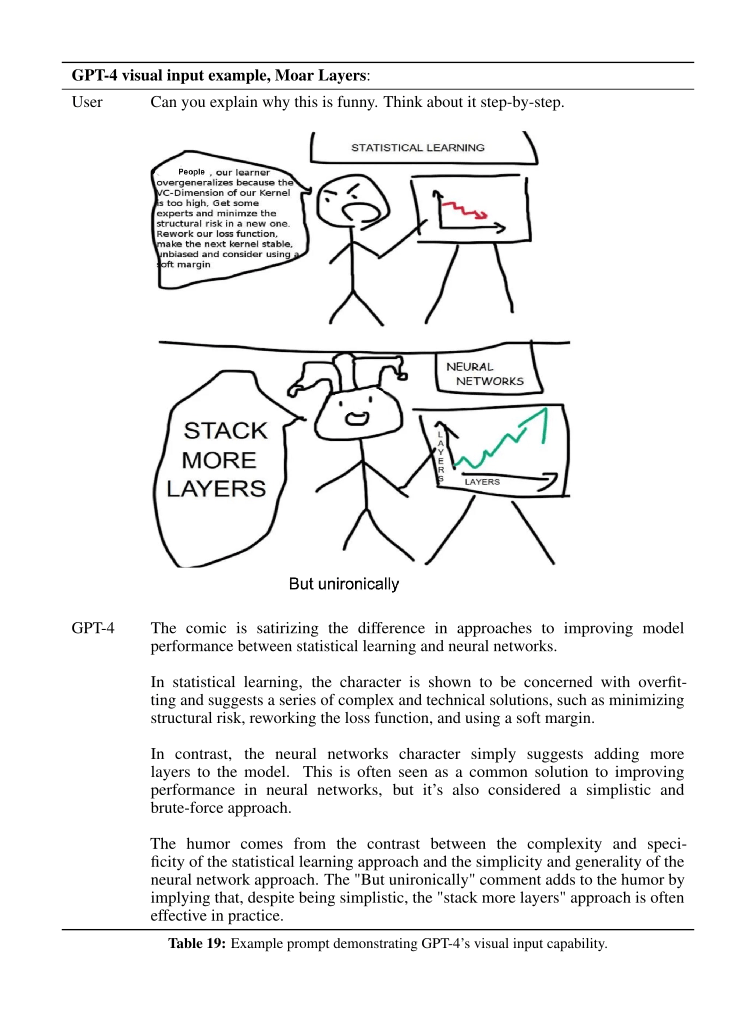

Finally, most recently, OpenAI released GPT-4 in March 2023, drawing the attention of the AI community with its sophisticated capabilities. GPT-4 is a “multimodal” model that can accept both text and image inputs. Due to safety concerns (GPT-4 could start—sort of already has—an AI arms race!) the exact architecture was not disclosed, though rumor has it it’s an 8 x 220B Mixture of Experts model, putting it over the (other) rumored 1 trillion parameters in total! (Though not in any one inference.) Capable of processing more complex and nuanced prompts, it showed improved understanding and generation across a wide spectrum of languages and tasks. GPT-4’s training incorporated structured data and lessons learned from previous versions, including RLHF, to reduce harmful and misleading outputs. The advancements in GPT-4 indicated OpenAI’s continuous endeavor to enhance the efficiency and safety of generative AI models. GPT-4V was even smart enough to explain memes:
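For the rumored Mixture of Experts design, the arithmetic is 8 × 220B ≈ 1.76 trillion parameters in total. Not all of them are used in any one inference because, in a Mixture of Experts layer, a learned router sends each token to only a few experts. A minimal sketch of top-k routing, where the linear gate, the toy experts, and k = 2 are illustrative assumptions rather than anything known about GPT-4:

```python
import math


def moe_forward(x, gate_weights, experts, k=2):
    """Sparse Mixture-of-Experts layer applied to one token vector x.

    gate_weights: one weight vector per expert, used by a linear router
    experts: callables mapping a vector to a vector of the same length
    k: how many experts actually run for this token
    Returns (output vector, indices of the experts that ran).
    """
    # Router: score every expert, keep only the k highest-scoring ones.
    scores = [sum(g * xi for g, xi in zip(gw, x)) for gw in gate_weights]
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]

    # Softmax over the chosen experts' scores gives their mixing weights.
    top = max(scores[i] for i in chosen)
    exp_scores = {i: math.exp(scores[i] - top) for i in chosen}
    total = sum(exp_scores.values())

    out = [0.0] * len(x)
    for i in chosen:  # the other experts' parameters are never touched
        y = experts[i](x)
        out = [o + exp_scores[i] / total * yi for o, yi in zip(out, y)]
    return out, chosen


# Four toy "experts" that just scale their input by 1, 2, 3, and 4.
experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
gate = [[0.0], [0.0], [1.0], [1.0]]
output, used = moe_forward([1.0], gate, experts, k=2)
```

Here only experts 2 and 3 run, each weighted 0.5, so the output is 3.5; with 8 experts and k = 2, only a quarter of the expert parameters would be active per token.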

Training costs

Source for parameter counts, HW training costs.

GPT-1

GPT-1 is puny by modern standards, with only 117 million parameters. It was trained for 30 days on a meager 8 P600 GPUs. The MSRP of a P600 is $178, so the GPUs for a machine that could train a GPT-1 every month in perpetuity would cost only about $1,400 (8 × $178). If we assume such a machine lasted for 10 years (120 monthly training runs), the hardware cost of training GPT-1 would be about $12.
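The amortization is simple enough to write down as a small function. This sketch counts only the GPUs’ list price and ignores the rest of the machine, electricity, and resale value (all simplifying assumptions):

```python
def amortized_cost_per_run(hardware_cost, lifetime_months, months_per_run):
    """Spread a one-time hardware cost evenly over every run it can perform."""
    runs = lifetime_months / months_per_run
    return hardware_cost / runs


gpu_cost = 8 * 178  # eight P600s at $178 MSRP = $1,424
per_run = amortized_cost_per_run(gpu_cost, lifetime_months=10 * 12, months_per_run=1)
print(f"${per_run:.2f} per GPT-1 training run")  # $11.87
```

A longer assumed lifetime shrinks the per-run figure proportionally; either way it stays in the tens of dollars.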

GPT-2

(The largest) GPT-2 is an order of magnitude larger, but hardware had progressed by the time it was trained. Unfortunately, OpenAI had started to become more secretive about model training by 2019, so the main thing we have to go off of is that they used 256 Google TPU v3 cores, likely in a Google Cloud TPU Pod. In 2019, a 256-core slice of a Cloud TPU v3 Pod cost $256/hr (at the “evaluation price”, which OpenAI might have negotiated a discount on; we can’t know for sure). Sources are slim on how long training took, but some guy on Reddit claims “training took a bit over a week.” A week at $256/hr is 168 hours, or about $43k, so we can estimate the training cost of GPT-2 at around $45k.
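The same estimate as code. “A bit over a week” is vague, so this sketch brackets it between 7 and 7.5 days (my assumption), at the quoted $256/hr for a 256-core TPU v3 slice:

```python
def rental_cost(hourly_rate, days):
    """Cloud rental cost for running continuously for `days` days."""
    return hourly_rate * days * 24


for days in (7, 7.5):
    print(f"{days} days: ${rental_cost(256, days):,.0f}")
```

The resulting range, about $43k to $46k, is where the ~$45k estimate comes from.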

GPT-3

GPT-3 is well-known and popular enough that someone has already written the article estimating its cost of training. The GPT-3 paper mentions that the models were trained on a cluster of V100 GPUs and that the total compute required was 3.14×10²³ FLOPs (floating point operations). A V100 theoretically provides 2.8×10¹³ FLOPs per second, so at 2020 Lambda Labs cloud GPU rental prices, “even at [the] lowest 3 year reserved cloud pricing we could find, this will take 355 GPU-years and cost $4.6M”.
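The quoted figure can be reproduced from the two numbers above: total FLOPs divided by one V100’s peak FLOPs per second gives GPU-seconds, which convert to GPU-years. This sketch assumes, like the quote, 100% of theoretical peak throughput (real utilization is lower, which would raise the cost), and backs out the hourly rate implied by $4.6M rather than assuming one:

```python
SECONDS_PER_YEAR = 365 * 24 * 3600
HOURS_PER_YEAR = 365 * 24


def gpu_years(total_flops, flops_per_gpu_second):
    """GPU-years needed if every GPU runs at its theoretical peak."""
    return total_flops / flops_per_gpu_second / SECONDS_PER_YEAR


years = gpu_years(3.14e23, 2.8e13)
implied_hourly_rate = 4.6e6 / (years * HOURS_PER_YEAR)
print(f"{years:.1f} GPU-years, about ${implied_hourly_rate:.2f} per V100-hour")
```

That works out to roughly 355.6 GPU-years at just under $1.50 per V100-hour, consistent with the quote.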

GPT-4

Finally, we know even less about GPT-4’s architecture or its training setup, but we do have an absurd lower bound on its cost: according to Wired, “at [an] MIT event, [OpenAI CEO Sam] Altman was asked if training GPT-4 cost $100 million; he replied, ‘It’s more than that.’”

Caveats

Of course, none of these costs include factors such as engineer time and office space rent, because those are so contingent on the situation. Another factor I can’t account for is hyperparameter tuning: to discover the best “type” of model to train, one needs to attempt training many copies of the model with different hyperparameters, settings fixed before training such as the learning rate. (I’m unsure of the details of these parameters, but I know this step exists.)
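As a rough illustration of why tuning multiplies cost, here is the simplest search strategy, a grid search, in which every combination of candidate values is a separate training run. The hyperparameter names and values are hypothetical, and real labs use cleverer strategies (and may tune on smaller models) precisely because this gets expensive:

```python
from itertools import product

# Hypothetical search space; names and values are for illustration only.
grid = {
    "learning_rate": [1e-4, 3e-4, 1e-3],
    "batch_size": [256, 512],
    "warmup_steps": [500, 2000],
}


def grid_configs(grid):
    """Every combination of candidate values, one dict per training run."""
    names = list(grid)
    return [dict(zip(names, values)) for values in product(*grid.values())]


configs = grid_configs(grid)
print(len(configs))  # 3 * 2 * 2 = 12 runs
```

If each run cost as much as the final model, this small sweep alone would multiply the training bill by 12.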

Appendix: AI Use

I used Anthropic’s Claude and OpenAI’s GPT-4 via API for a few things in writing this, to varied results:

- I sometimes asked GPT-4 to define ML jargon I was unfamiliar with.

- I tried to have Claude and/or GPT-4 write the “GPT lineage” section. Both results sounded OK, but were full of hallucinations/confabulations of paper titles, years, features, etc. It even got the order of GPT-3.5, InstructGPT, WebGPT wrong. I thought it particularly ironic that GPT-4 Turbo didn’t know about GPT-4 (non-Turbo; should be within its training data). I manually researched the release date, paper title, etc. of each model/model variant, replaced incorrect paper titles, had it rewrite the GPT-3.5 stuff it got seriously wrong after providing it a list of the actual facts about each model, and then rewrote most of that by hand anyway because it wasn’t very good. For each model, I only had it write the first paragraph about when a model was introduced, in what paper, the impact on ML, etc. I ended up writing the majority of the exposition on each model myself.

- When I was done with the assignment, I fed it and the assignment instructions to GPT-4 to see if I was missing any of the requirements.